Something unusual, and rather unwelcome, is afoot with the graphics options in the newly released first episode of Hitman. According to reports (accompanied by verifying screenshots) from players with lower-end hardware, the game is artificially greying out certain choices. It seems the game is checking player PCs, and then restricting texture quality, resolution, and super-sampling if things like VRAM aren’t hitting defined parameters.

This isn’t something either Tim or I ran into at all (he just upgraded his GPU, and I upgraded my whole PC), so it wasn’t covered by the review. As an aside, I can confirm from experience that 4GB of VRAM (not 6GB, as some have speculated) is enough to use ‘High’ textures.

Still, even though I wasn’t affected, I can empathise with those who were. Making automated, hardware-based choices about what graphics options players are ‘allowed’ to select seems unnecessarily restrictive. PC games at their best are about flexibility, customisation, and, dammit, making absurdly optimistic graphics choices and running the game at 8fps if people really feel like it (or, as the gentleman in the Reddit post linked above likes to do, taking 4K screenshots).

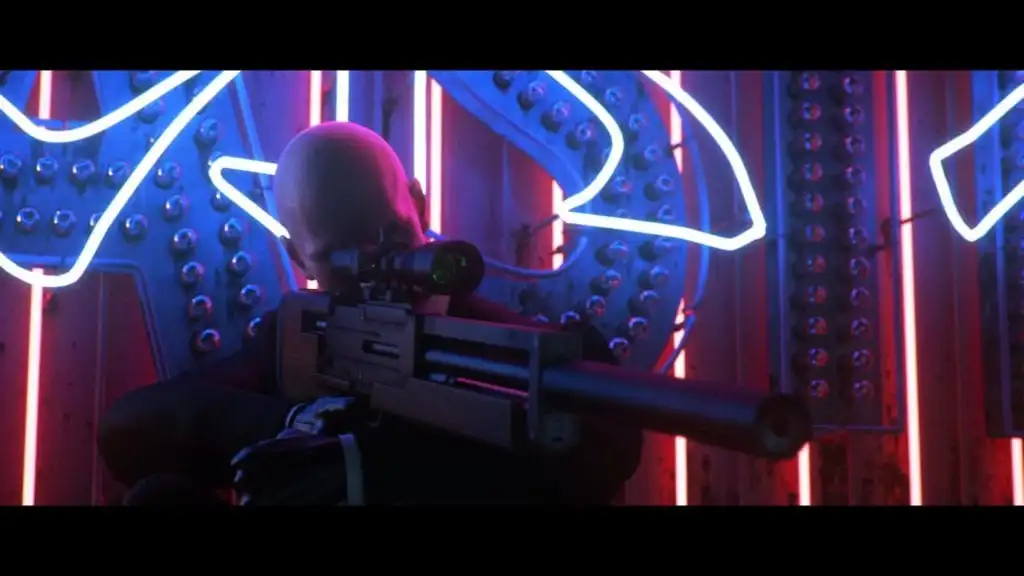

This is what everybody should be able to see, if they really want.

To poke around inside the minds of IO Interactive for a moment, I presume this decision came from irritation at seeing one too many Steam rants about “optimisation!!!” from people using PCs that require carbon dating to determine their origins. The land of PC is a wonderful place, but it can also be a very confusing and frustrating one. Without a mini-encyclopedia of personal knowledge about things like the basic definition and performance cost of ‘anisotropic filtering’, or the perils of GameWorks if you own an AMD card, fiddling around with graphics options can be a daunting task.

The solution, though, isn’t to treat people like permanent, tech-illiterate fools and lock out various options. That’s just going to generate a different sort of anger to the one IO were attempting to bypass, and probably just as many Hitman refund requests.

First of all, implementing automated hardware checks is going to result in a lot of false positives. The range and diversity in PC hardware is such that just going “ehh, you only have 3GB of VRAM, don’t even bother with better textures” is a rather blunt approach. We’ve all got stories and anecdotes about managing to acceptably run demanding games on older machines. Call of Duty: Ghosts had to patch out a lock that prevented systems with less than 6GB of RAM from running it on PC, when it turned out it could run just fine with less. I understand that you can’t magically make super-high-res textures work on GPUs from the Bronze Age, but those in the VRAM margins might have a chance. Sometimes an aging PC surprises you.

Imagine if this were the first PC graphics menu you’d ever seen; you’d probably just scream and pass out.

But further to that, there are perfectly sound existing methods for guiding your player-base toward system-appropriate options, and most of them involve educating rather than restricting. ‘Auto-detect’ type options are imperfect too, but they provide a baseline from which players can test their luck and find tolerable settings.

Other visual aids, like VRAM bars that gradually fill up as you select options (as seen in things like GTA V) and little explanations of what individual settings actually are (which, thankfully, The Division’s packed menu had), are great at informing and guiding players without denying them options outright. Most people can understand that the VRAM bar being a touch over the limit might be worth risking, but picking options that send it sky-rocketing beyond a card’s boundaries is probably going to end poorly. Ideally, you also incorporate estimates of how each particular setting will impact overall performance (as I think Shadow of Mordor did).
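For the curious, here is a rough idea of what an advisory VRAM bar might look like in code. This is only a sketch, not how GTA V, Hitman, or any other game actually does it: the per-setting costs, the 32-bytes-per-pixel figure, the 15 percent "risky" margin, and every name in it are made-up assumptions for illustration. The point is that the function only ever reports; it never refuses a choice.

```python
from dataclasses import dataclass

# Hypothetical texture costs in MB; real figures would come from profiling the game.
TEXTURE_COST_MB = {"low": 512, "medium": 1024, "high": 2048}
# Assumed combined cost of all render targets, per rendered pixel.
BYTES_PER_PIXEL = 32
# Assumed margin: up to 15% over budget is flagged as risky rather than hopeless.
RISKY_MARGIN = 1.15


@dataclass
class Settings:
    texture_quality: str
    width: int
    height: int
    supersampling: float = 1.0  # scale factor applied to each axis


def estimate_vram_mb(settings: Settings) -> float:
    """Very rough VRAM estimate for the chosen settings, in MB."""
    pixels = (settings.width * settings.supersampling) * (
        settings.height * settings.supersampling
    )
    render_targets_mb = pixels * BYTES_PER_PIXEL / (1024 * 1024)
    return TEXTURE_COST_MB[settings.texture_quality] + render_targets_mb


def vram_advice(settings: Settings, available_mb: float):
    """Return (estimated MB, fill ratio, advisory label). Never blocks a choice."""
    used = estimate_vram_mb(settings)
    ratio = used / available_mb
    if ratio <= 1.0:
        label = "ok"
    elif ratio <= RISKY_MARGIN:
        label = "risky"
    else:
        label = "likely to perform poorly"
    return used, ratio, label


if __name__ == "__main__":
    used, ratio, label = vram_advice(Settings("high", 1920, 1080), available_mb=2048)
    print(f"{used:.0f} MB of 2048 MB ({ratio:.0%}): {label}")
```

A menu built on something like this would draw the ratio as the bar and show the label as a warning next to it, leaving the final decision with the player.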

If you put that kind of information directly in front of people and they still complain when their five-year-old graphics card can’t max things out at 60fps, then they’re the idiots. If you prejudge them as morons and restrict things from the outset, then you’re just the priggish developer who isn’t letting people experiment with the limitations of their own PCs.

Gonna need to see some VRAM ID before I let you have any more textures, sir.

I’m sure workarounds for Hitman will be out there soon (if they’re not already), but that’s not really the point. Providing players with information and trusting them to learn something about performance limitations is a much more positive, PC-friendly approach than assuming people will never learn and selecting the ‘best’ settings for them by artificial force. It’s a shame IO feel otherwise, and hopefully Hitman will get a CoD: Ghosts-style patch to lift some of these restrictions.

Published: Mar 12, 2016 05:57 am